ONCE: Boosting Content-based Recommendation with Both Open- and Closed-source Large Language Models

Aug. 25th, 2024: Introduced at the Workshop on Generative AI for Recommender Systems and Personalization!

Oct. 20th, 2023: Accepted by WSDM '24. Our first version (i.e., GENRE) discussed the use of closed-source LLMs (e.g., GPT-3.5) in recommender systems, while this version (i.e., ONCE) further combines open-source LLMs (e.g., LLaMA) with closed-source LLMs.

Qijiong LIU, Nuo CHEN, Tetsuya SAKAI, and Xiao-Ming WU#

[Code] [Paper]

Abstract

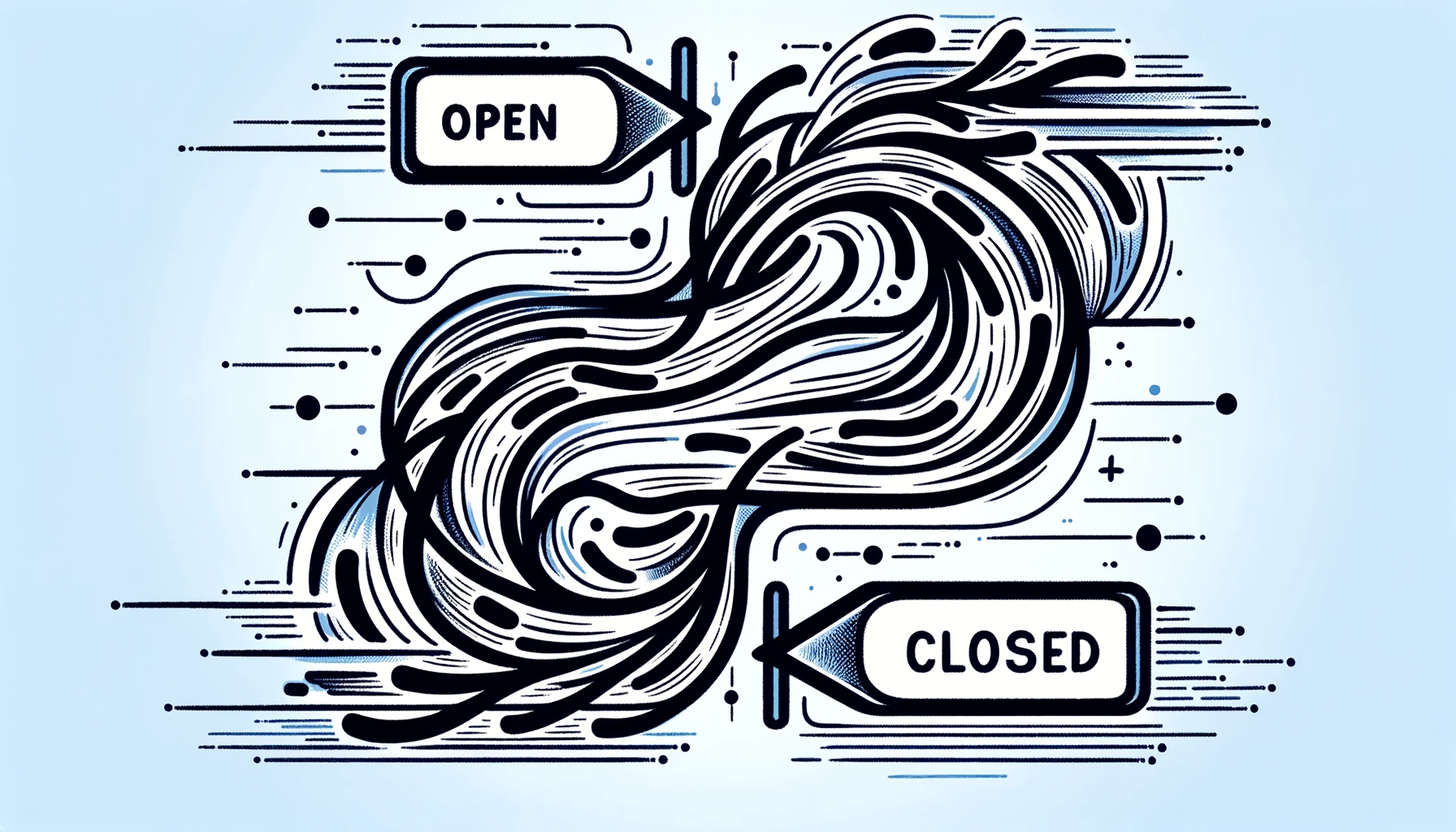

Personalized content-based recommender systems have become indispensable tools for users to navigate through the vast amount of content available on platforms like daily news websites and book recommendation services. However, existing recommenders face significant challenges in understanding the content of items. Large language models (LLMs), which possess deep semantic comprehension and extensive knowledge from pretraining, have proven to be effective in various natural language processing tasks. In this study, we explore the potential of leveraging both open- and closed-source LLMs to enhance content-based recommendation. With open-source LLMs, we utilize their deep layers as content encoders, enriching the representation of content at the embedding level. For closed-source LLMs, we employ prompting techniques to enrich the training data at the token level. Through comprehensive experiments, we demonstrate the high effectiveness of both types of LLMs and show the synergistic relationship between them. Notably, we observe a significant relative improvement of up to 19.32% compared to existing state-of-the-art recommendation models. These findings highlight the immense potential of both open- and closed-source LLMs in enhancing content-based recommendation systems. We will make our code and LLM-generated data available for other researchers to reproduce our results.
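The two enrichment levels described in the abstract can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and is not taken from the released code: the prompt wording in `PROMPT_TEMPLATE`, the `generated` field name, the `fake_llm` stand-in for a closed-source LLM API, and the use of mean pooling over token vectors from a deep layer of an open-source LLM.

```python
from typing import Callable, Dict, List

# Hypothetical prompt template; the wording is an assumption, not the paper's prompt.
PROMPT_TEMPLATE = (
    "You are given a news title.\n"
    "Title: {title}\n"
    "Write a one-sentence summary of what the article is likely about."
)


def build_prompt(title: str) -> str:
    """Fill the (hypothetical) template with an item title."""
    return PROMPT_TEMPLATE.format(title=title)


def enrich_items(
    items: List[Dict[str, str]], llm: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Token-level enrichment: return copies of the items with an extra
    'generated' text field produced by the supplied LLM callable."""
    enriched = []
    for item in items:
        new_item = dict(item)  # copy so the input records are not mutated
        new_item["generated"] = llm(build_prompt(item["title"])).strip()
        enriched.append(new_item)
    return enriched


def mean_pool(hidden_states: List[List[float]], mask: List[int]) -> List[float]:
    """Embedding-level sketch: average the token vectors taken from a deep
    layer, skipping padded positions (mask == 0). Mean pooling is an
    assumption chosen for simplicity."""
    kept = [vec for vec, m in zip(hidden_states, mask) if m]
    if not kept:
        raise ValueError("mask removes every token")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


def fake_llm(prompt: str) -> str:
    """Stand-in for a closed-source LLM call; echoes the title from the prompt."""
    title = prompt.split("Title: ", 1)[1].split("\n", 1)[0]
    return f"Summary of: {title}"


items = [{"id": "n1", "title": "Local team wins championship"}]
enriched = enrich_items(items, fake_llm)

# Toy token vectors standing in for deep-layer hidden states; the last is padding.
item_embedding = mean_pool([[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]], [1, 1, 0])
```

In practice `fake_llm` would be replaced by a real API client, and the toy vectors by hidden states read from an open-source model; the sketch only fixes the data flow, in which generated text is appended to each training record while the item embedding comes from the encoder.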

Citation

@inproceedings{liu2023once,
